Haskell in Green Land: Analyzing the energy behavior of a purely functional language

This post is a summary of a paper currently under submission. This work was conducted in cooperation with my colleague Joao Paulo Fernandes from UBI, Portugal, and students Luís Gabriel Lima, Francisco Soares-Neto, and Paulo Lieuthier from UFPE and Gilberto Melfe from UBI.

Researchers have been studying energy efficiency for hardware components for a long time. However, more than 20 years ago, the well-known paper [1] by Tiwari, Malik, and Wolfe stated that it is either impractical or impossible to use the lower level tools to estimate the power cost of the software component of the system, in the context of embedded software. Apparently, lots of people agree, since the paper has more than 1100 citations at Google Scholar. In the last few years, the growing worldwide movement towards sustainability, including sustainability in software, combined with the systemic nature of energy efficiency as a quality attribute and the widespread adoption of mobile, battery-reliant devices have motivated the study of the energy impact of application software in execution. This tendency has led researchers to evaluate existing techniques, tools, and languages for application development from an energy-centric perspective. Recent work has studied the effect that factors such as code obfuscation [2], Android API calls [3], object-oriented code refactorings [4], constructs for concurrent execution [5], and data types [6] have on energy efficiency. Analyzing the impact of different factors on energy is important for software developers and maintainers. It can inform their decisions about the best and worst solutions for a particular context. Moreover, it is important to make developers aware that seemingly small modifications can yield considerable gains in terms of energy. For example, a study [3] by Vasquez et al. has discovered that some Android API calls consume 3000 of times more energy than the average Android API call. These API calls should clearly be avoided if possible and energy is an important requirement.

We have decided to explore an additional dimension. More specifically, we study the energy behavior of programs written in a lazy, purely functional language, namely, Haskell. Functional languages, in general, include a number of features that are not generally available in imperative programming languages. In particular, Haskell has mature implementations of sophisticated features such as laziness, partial functional application, software transactional memory, tail recursion, and a kind system. Furthermore, recursion is the norm in Haskell programs and side effects are restricted by the type system of the language. Due to all these differences it is possible that programs written in such a language behave differently from those written in imperative languages, from an energy perspective. As a sidenote, if you’re not familiar with Haskell, I highly recommend you spend some quality time with it. It’s a language that, in the worst case, will make you think a little differently about programming and, in the best, will make you fall in love head over heels. Miran Lipovaca’s great book is a nice starting point.

We analyze the energy efficiency of Haskell programs from two different perspectives: strictness and concurrency. By default, expressions in Haskell are lazily evaluated, meaning that any given expression will only be evaluated when it is first necessary. This is different from most programming languages, where expressions are evaluated strictly and possibly multiple times. In Haskell, it is possible to force strict evaluation in contexts where this is useful. This is very important to analyze the performance and energy efficiency of Haskell programs. As for concurrency, previous work [5,7] has demonstrated that concurrent programming constructs can influence energy consumption in unforeseen ways. We attempt to shed more light on this complex subject. More specifically, we address the following high-level research question:

- To what extent can we save energy by refactoring existing Haskell programs to use different data structure implementations or concurrent programming constructs?

To gain insight into the answer to this question, we conducted two complementary empirical studies. In the first one we analyzed the performance and energy behavior of several benchmark operations over 15 different implementations of three different types of data structures. Even though Haskell has several implementations of well-known data structures, we are not aware of any experimental evaluation of these implementations. In the second one we assessed three different thread management primitives and three constructs for data sharing using nine benchmarks and multiple experimental configurations. To the best of our knowledge, this is the first study of its kind targeting Haskell’s concurrent programming constructs. Overall, experimental space exploration comprises more than 2000 configurations and 20000 executions.

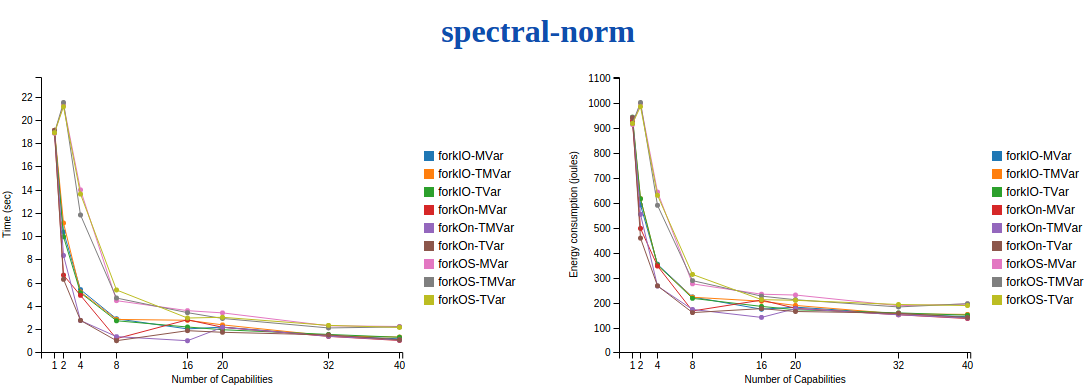

We found that small changes can make a big difference in terms of energy consumption, specially when considering Haskell’s primitives for thread management. In one of our benchmarks, spectralnorm, using forkOn with TVar to create new threads, instead of forkOS, can save between 25 and 57% energy. The graph below presents results for both time and energy for this benchmark, when varying the number of available virtual processors (capabilities) used by the Haskell runtime in a machine with 20 physical cores. We observed similar results, though not necessarily using the same thread management primitives, for 5 other concurrent benchmarks. This is very good news for developers. Switching between thread management primitives is very simple in Haskell. Functions forkOn, forkIO, and forkOS take a computation of type IO as parameter and produce results of the same type. Thus, the only difficulty is in determining on which capability a thread created via forkOn will run. For most practical cases, this is a trivial decision.

We observed a similar phenomenom for data-sharing constructs (MVar, TMVar, TVar). In one of our benchmarks, under a specific configuration, choosing one data sharing construct, MVar, over another, TMVar, can yield 60% energy savings.

Nonetheless, neither for thread management primitives nor for data sharing constructs, there is a universal winner. The results vary depending on the characteristics of each program. In another benchmark, TMVars can yield up to 30% energy savings over MVars. Alternating between data sharing primitives is not as easy as doing so for thread management primitives, but still not hard, depending on the characteristics of the program to be refactored. Going from MVar to TMVar and back is straightforward because they have very similar semantics. For the transitions from TMVar to TVar and from MVar to TVar, things get trickier because the semantic distance from TVar to the other two constructs when condition-based synchronization comes into play is considerable. In this case, it is possible that a more in-depth analysis of the program behavior will be necessary. Nonetheless, save for these trickier cases, tools that support developers in quickly refactoring a program to switch between different primitives can be of great help if energy is a concern.

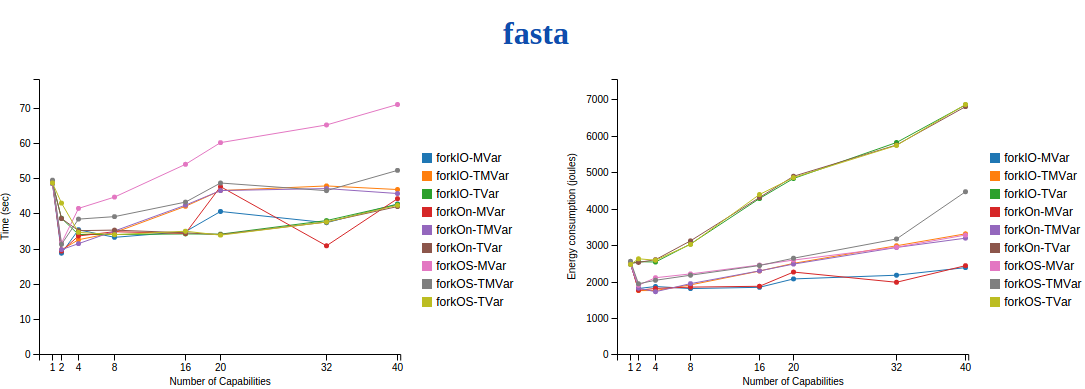

In addition, the relationship between energy consumption and performance is not always clear. Generally, specially in the sequential benchmarks, high performance is a proxy for low energy consumption. Nonetheless, when concurrency comes into play, we found scenarios where the configuration with the best performance (30% faster than the one with the worst performance) also exhibited the second worst energy consumption (used 133% more energy than the one with the lowest usage). The following graphs illustrate this point in the context of the fasta. In both cases, a lower value is better. In the graph on the left-hand side, depicting performance, the lines for the TVar variants of the benchmark are among the lowest ones. In the graph on the right-hand side, the lines for TVar exhibit the highest values for energy consumption.

To support developers in better understanding this complex relationship, we have extended two existing tools for performance analysis to make them energy-aware. The first one is the Criterion benchmarking library, which we have employed extensively in the two studies. The second one is the profiler that comes with the Glasgow Haskell compiler.

The data for this study, as well as the source code for the implemented tools can be found here. We will soon make a preprint of our paper available. If you’re interested, don’t hesitate to contact us.

####References

[1] V. Tiwari, S. Malik, and A. Wolfe, ``Power analysis of embedded software: a first step towards software power minimization’’, Very Large Scale Integration (VLSI) Systems, IEEE Transactions on, vol. 2, no. 4, pp. 437-445, Dec 1994.

[2] C. Sahin, P. Tornquist, R. Mckenna, Z. Pearson, and J. Clause, ``How does code obfuscation impact energy usage?’’. In 30th IEEE International Conference on Software Maintenance and Evolution, Victoria, BC, Canada, September 29 - October 3, 2014, 2014, pp. 131-140.

[3] M. L. Vasquez, G. Bavota, C. Bernal-Cardenas, R. Oliveto, M. D. Penta, and D. Poshyvanyk, ``Mining energy-greedy API usage patterns in android apps: an empirical study’’. In 11th Working Conference on Mining Software Repositories, MSR 2014, Proceedings, May 31 - June 1, 2014, Hyderabad, India, 2014, pp. 2-11.

[4] C. Sahin, L. Pollock, and J. Clause, ``How do code refactorings affect energy usage?’’. In Proceedings of the 8th ACM/IEEE International Symposium on Empirical Software Engineering and Measurement, 2014, pp. 36:1-36:10.

[5] G. Pinto, F. Castor, and Y. D. Liu, ``Understanding energy behaviors of thread management constructs’’, In Proceedings of the 2014. ACM International Conference on Object Oriented Programming Systems Languages & Applications. Portland, USA, 2014, pp. 345-360.

[6] K. Liu, G. Pinto, and Y. Liu, ``Data-oriented characterization of application-level energy optimization’’. In Proceedings of the 18th In- ternational Conference on Fundamental Approaches to Software Engineering, LNCS vol. 9033, 2015, pp. 316-331.

[7] A. E. Trefethen and J. Thiyagalingam, ``Energy-aware software: Challenges, opportunities and strategies’’. Journal of Computational Science, vol. 4, no. 6, pp. 444 - 449, 2013.